AI Trainer: Autoencoder-Based Approach for Squat Analysis and Correction

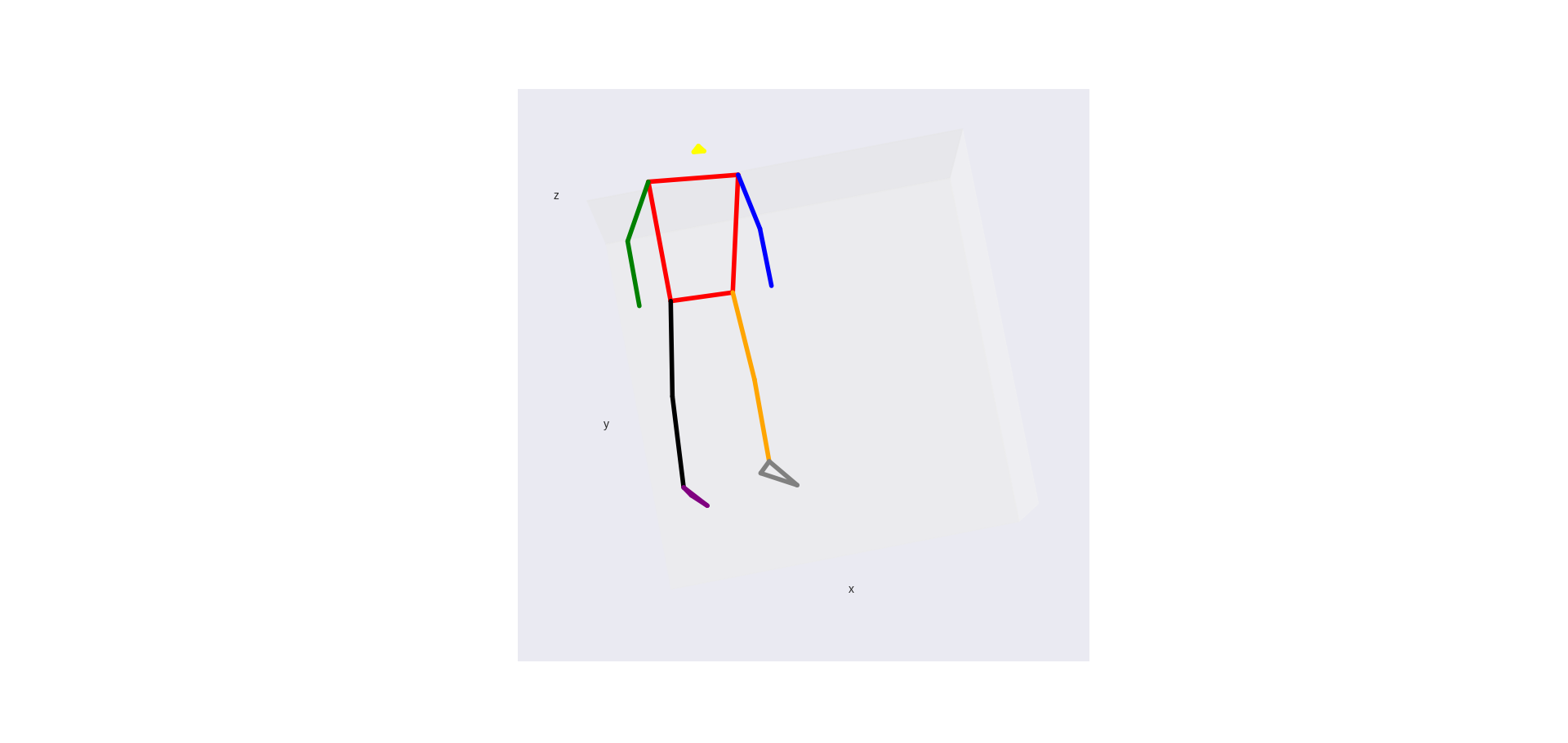

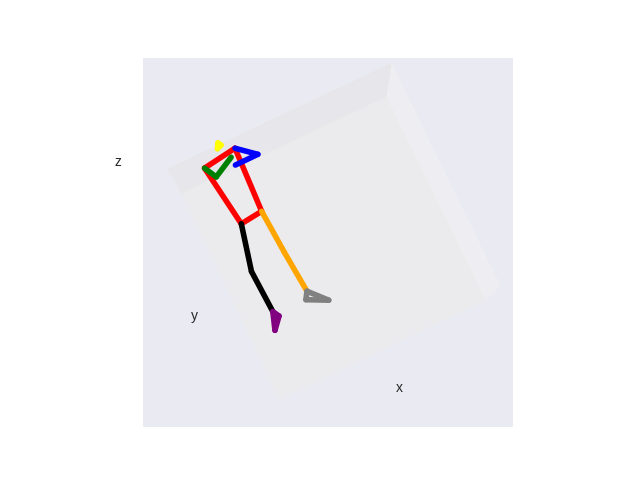

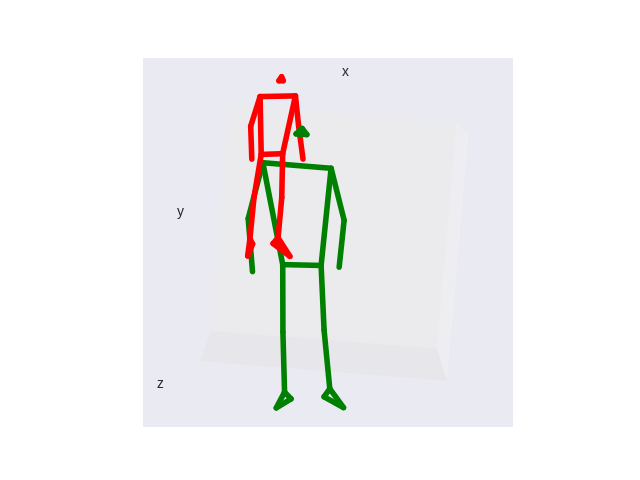

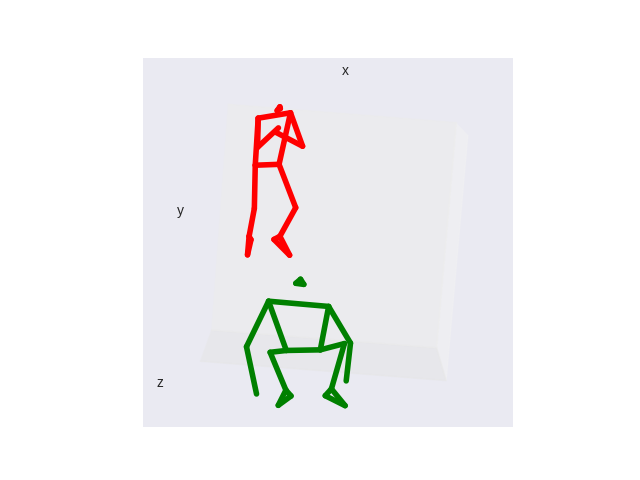

This project uses deep learning and computer vision to evaluate squat performance and provide corrective feedback. We designed a bidirectional GRU (Bi-GRU) model with attention that classifies squats into 7 types with 94% accuracy. Data was collected from 40 participants with varied motion patterns using a custom multi-camera setup. My role involved designing the data collection apparatus, building the feature extraction pipeline, and training and testing the neural model.
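The sketch below illustrates the general shape of a Bi-GRU classifier with attention over a sequence of per-frame features. It covers only the forward pass of an untrained model and is written in pure Python so it runs without a framework. Several details are assumptions for illustration rather than the project's actual design: the bias-free GRU cell, the attention form (a single learned scoring vector), the feature and hidden sizes, the random initialization, and every name (`GRUCell`, `BiGRUAttentionClassifier`, etc.). A real implementation would more likely use a framework such as PyTorch and learn the weights by backpropagation.

```python
import math
import random

NUM_CLASSES = 7  # number of squat types named in the project description


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


class GRUCell:
    """Hypothetical bias-free GRU cell with update (z), reset (r) and candidate (n) gates."""

    def __init__(self, input_size, hidden_size, rng):
        self.hidden_size = hidden_size
        self.W = {g: rand_matrix(hidden_size, input_size, rng) for g in "zrn"}
        self.U = {g: rand_matrix(hidden_size, hidden_size, rng) for g in "zrn"}

    def gate(self, g, x, h, act):
        return [act(a + b) for a, b in zip(matvec(self.W[g], x), matvec(self.U[g], h))]

    def step(self, x, h):
        z = self.gate("z", x, h, sigmoid)
        r = self.gate("r", x, h, sigmoid)
        # Reset gate scales the previous state before it feeds the candidate
        n = self.gate("n", x, [ri * hi for ri, hi in zip(r, h)], math.tanh)
        # h_t = (1 - z) * n + z * h_{t-1}
        return [(1 - zi) * ni + zi * hi for zi, ni, hi in zip(z, n, h)]


class BiGRUAttentionClassifier:
    """Hypothetical Bi-GRU + attention classifier (forward pass only, untrained)."""

    def __init__(self, input_size, hidden_size, num_classes=NUM_CLASSES, seed=0):
        rng = random.Random(seed)
        self.fwd = GRUCell(input_size, hidden_size, rng)
        self.bwd = GRUCell(input_size, hidden_size, rng)
        # Scoring vector for attention over the 2*hidden_size states (assumed form)
        self.v = [rng.uniform(-0.1, 0.1) for _ in range(2 * hidden_size)]
        self.W_out = rand_matrix(num_classes, 2 * hidden_size, rng)

    @staticmethod
    def _run(cell, seq):
        h, states = [0.0] * cell.hidden_size, []
        for x in seq:
            h = cell.step(x, h)
            states.append(h)
        return states

    def forward(self, seq):
        """seq: list of per-frame feature vectors. Returns (class probs, attention weights)."""
        fwd = self._run(self.fwd, seq)
        bwd = self._run(self.bwd, seq[::-1])[::-1]  # re-align backward states with time
        H = [f + b for f, b in zip(fwd, bwd)]  # list concatenation: 2*hidden_size per frame
        alpha = softmax([sum(vi * hi for vi, hi in zip(self.v, h_t)) for h_t in H])
        # Attention-weighted sum of the per-frame states gives one context vector
        context = [sum(a * h_t[j] for a, h_t in zip(alpha, H)) for j in range(len(self.v))]
        return softmax(matvec(self.W_out, context)), alpha


if __name__ == "__main__":
    rng = random.Random(42)
    # 30 frames of 4 synthetic features each, standing in for real extracted features
    seq = [[rng.uniform(-1.0, 1.0) for _ in range(4)] for _ in range(30)]
    probs, attn = BiGRUAttentionClassifier(input_size=4, hidden_size=8).forward(seq)
    print(len(probs), round(sum(probs), 6))  # 7 1.0
    print("predicted class:", max(range(len(probs)), key=probs.__getitem__))
```

Because the weights are random, the predicted class is meaningless until the model is trained. The sketch is meant to show the data flow: per-frame features feed forward and backward GRU passes, the attention weights pool the per-frame states into one vector, and a 7-way softmax produces the class probabilities.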

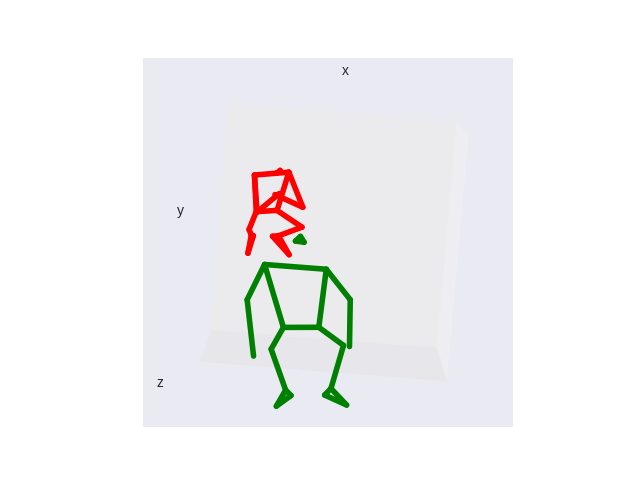
